Abstract

Uncertainty introduced by the testing method is virtually unavoidable. Testing activities can fail in many ways; however, most problems can be prevented through risk analysis early in the test life cycle. Risk implies a potential negative impact on any of the test activities, affecting the system either directly or indirectly. Steve Wakeland defines IT risk as ‘the likelihood that a program fault will result in an impact on the businesses’.

Risk based testing differs from the traditional way of system testing because it emphasizes the various risks involved in the testing life cycle. Although the probability of a risk may be known, its outcome remains unknown; hence we define risk as the product of the cost of a defect and the probability that it occurs.

The objective of this paper is to put forth a risk based test strategy that helps testers identify the risks involved at the early stages of a test life cycle and reduce the Cost of Poor Quality (COPQ). The paper deals with risk prediction and mitigation methodologies. The Metrics Based Management approach illustrated in our case study enabled us to identify the probability and the consequences of individual risks during the test life cycle.

A risk analysis was performed, and the functions with the highest risk exposure, in terms of probability and cost, were identified. Risk prediction is derived from planning, assessing and mitigating risks, and involves forecasting risks using the history and knowledge of previously identified risks. The risk mitigation activity inspects the critical functions and focuses testing on them to minimise the impact of a failure in those functions.

This paper is intended for test engineers, test leads, test managers and others with knowledge of software testing.

1. Introduction

Testing is a time-consuming part of software engineering and the last phase of the Software Development Life Cycle. It always has to be done under severe pressure. It would be unthinkable to quit the job, to delay delivery or to test badly. The real answer is a differentiated test approach that does the best possible job with limited resources.

Risk based testing can give more confidence that the right things are tested at the right time, because it focuses and justifies test effort in terms of the mission of testing itself. The basic principle of risk based testing is to test most heavily the parts or components of the system that pose the highest risk to the project, so that the most fault-prone areas are identified. At the system level, one should first test what is most important in the application and, second, test where one is likely to find the most defects.

This paper provides a method for risk based testing, explained in the following sections. Section 2 covers the basics of risk based testing, Section 3 presents the high-level test process we propose for risk based testing, and Section 4 illustrates our test strategy using a case study.

2. Risk Based Testing

2.1 Risk

Risk is a possible failure or unwanted event that has negative consequences. According to Wikipedia, “Risk is the potential future harm that may arise from some present action.” When talking about risk, we need to think about its cost and the probability that it might occur.

Risk = Cost * Probability
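The formula can be made concrete with a small sketch. The `Risk` class, its field names and the sample risks below are hypothetical illustrations rather than part of the method; the code simply multiplies cost by probability and ranks the risks by the resulting exposure.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    cost: float         # impact if the risk materializes (any consistent unit)
    probability: float  # likelihood of occurrence, between 0.0 and 1.0

    @property
    def exposure(self) -> float:
        # Risk = Cost * Probability
        return self.cost * self.probability


def rank_risks(risks):
    """Return the risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Hypothetical risks, for illustration only
risks = [
    Risk("report export fails", cost=40.0, probability=0.5),
    Risk("login times out", cost=10.0, probability=0.9),
    Risk("data import corrupts records", cost=300.0, probability=0.1),
]

for r in rank_risks(risks):
    print(f"{r.name}: {r.exposure:.1f}")
```

A rare but expensive risk (the corrupted import, exposure 30.0) outranks a frequent but cheap one (the login timeout, exposure 9.0), which is exactly the trade-off the formula is meant to expose.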

Risks can normally be accepted, prevented or transferred. We need to look at the degree to which we can change the outcome. Risks are divided into three general types: project, business and technical risks.

2.2 What is Risk Based Testing

The important question everyone asks is “What makes Risk Based Testing different from traditional testing?” By analogy, we all know that money is important in life, but its importance differs from person to person: there is a big difference between people who look for job satisfaction through challenging work that also earns money and people who work for an organization only because of a high compensation package. Similarly, although risks are also identified in traditional testing, the greater emphasis on risk, and building the test strategy around the identified risks, is what makes risk based testing different from traditional testing.

Most risk based testing follows a black box approach, which checks the system against the requirement specification. Once the high-priority risks are identified, a testing strategy needs to be developed to explore them.

Our approach is largely based on the Risk Analysis activity model shown in Figure 1, taken from Karolak’s book “Software Engineering Risk Management”.

3. Our proposed method for Risk based Testing

Our approach concentrates on project-level technical risks; identifying, tracking and mitigating business-level and management-level risks is not part of our approach. This section provides an overview of the steps involved in the risk based testing methodology. The case study in Section 4 serves as the proof of concept for the model described below.

The Test strategy includes the following steps:

3.1 Risk Identification

3.2 Risk based Testing strategy

3.3 Risk Reporting

3.4 Risk Prediction

3.1 Risk Identification

A risk is an unwanted event that has negative consequences. It is highly important that risk analysis starts at the beginning of the testing life cycle.

3.1.1 Requirements Risk

Normally, software testers review the requirements documents before starting test case design. Most of the time, the requirements turn out to be absurd, contradictory or misleading. Much research is under way on how to measure the quality of a requirements document. The steps below offer a simple way of reducing requirements-level risk, which leads to a high COPQ when it is uncovered during later phases of testing. The model assumes that the requirements document covers more than 95% of the product requirements and that the tester’s role is to verify the requirements document for testability.

1. The requirements should not contain any TBDs or TBAs.

2. Each requirement should be rated for understandability, clarity and testability on a scale of 1 to 5, where 5 is the ideal value. The product of the U (understandability), C (clarity) and T (testability) ratings gives the risk factor for the requirement. The minimum threshold value for the risk factor is 45. Any requirement with a risk factor below the minimum threshold needs review and revision.

3. Each module or component should be rated for requirements coverage in the following areas: Functionality requirements (FR), Usability and User Interface requirements (UR), and Security and Performance requirements (S&PR).

4. The product of FR, UR and S&PR gives the risk factor for the module or component. The minimum threshold value for the risk factor is 45. Any module or component with a risk factor below the minimum threshold needs review and revision.

5. Schedule an SGR (Stage Gate Review) with the development team, the testing team and the other stakeholders of the project to review and revise the individual requirements and modules that have not met the minimum threshold value.

Following the five steps above eliminates most requirements-based risks. We should accept that risks in the requirements are the most severe, since they lead to a high COPQ when uncovered in later phases.
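The requirements screening steps can be sketched as a small script. This is a minimal illustration under stated assumptions: the function names and the sample requirements and modules are hypothetical, and the module coverage ratings (FR, UR, S&PR) are assumed to use the same 1 to 5 scale as the requirement ratings, which the method does not state explicitly. Anything the script flags would go to the Stage Gate Review in step 5.

```python
import re
from math import prod

THRESHOLD = 45  # minimum acceptable risk factor (from the method above)
PLACEHOLDER = re.compile(r"\b(TBD|TBA)\b", re.IGNORECASE)


def has_placeholder(text: str) -> bool:
    """Step 1: a requirement must not contain TBD or TBA markers."""
    return PLACEHOLDER.search(text) is not None


def risk_factor(*ratings: int) -> int:
    """Product of 1-5 ratings: U*C*T for a requirement, FR*UR*S&PR for a module."""
    for rating in ratings:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside 1..5")
    return prod(ratings)


def requirements_for_review(requirements):
    """Steps 1-2: IDs of requirements with placeholders or a factor below the threshold."""
    return [req_id for req_id, (text, uct) in requirements.items()
            if has_placeholder(text) or risk_factor(*uct) < THRESHOLD]


def modules_for_review(modules):
    """Steps 3-4: modules whose coverage factor falls below the threshold."""
    return [name for name, coverage in modules.items()
            if risk_factor(*coverage) < THRESHOLD]


# Hypothetical ratings; each tuple is (U, C, T) or (FR, UR, S&PR)
requirements = {
    "REQ-01": ("The system shall export each report as a PDF file.", (5, 4, 4)),
    "REQ-02": ("Report generation time is TBD.", (4, 4, 4)),
    "REQ-03": ("Charts should look good.", (4, 2, 2)),
}
modules = {"Import": (4, 3, 4), "Reporting": (3, 3, 3)}

# Candidates for the Stage Gate Review (step 5)
print(requirements_for_review(requirements))  # ['REQ-02', 'REQ-03']
print(modules_for_review(modules))            # ['Reporting']
```

REQ-02 is flagged despite a passing factor of 64 because it still contains a TBD, which shows why step 1 is checked independently of the rating product.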

3.1.2 Customer CTQs based Risk Matrix

The various risks (project, business and technical) need to be analyzed before starting test planning. The following activities need to be carried out in this phase. Software testers need to validate and confirm the customer’s CTQs (Critical To Quality characteristics) against their understanding of the system.

1. The tester needs to develop a QFD (Quality Function Deployment, a Six Sigma tool) to prioritize the testing types (Functional Testing, User Interface Testing, Security Testing, Performance Testing, etc.) required to meet the customer CTQs.

2. The tester needs to develop another QFD to prioritize the modules or components that need immediate testing in order to meet the customer CTQs.
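The QFD calculation behind both prioritization steps can be sketched as follows. It assumes the common QFD convention of rating each relationship as strong (9), medium (3) or weak (1) and summing customer importance times relationship strength for each column; the CTQs, weights and relationships shown are hypothetical and do not reproduce the project’s QFD. The same function serves step 2 if the columns are modules instead of testing types.

```python
# Relationship strengths commonly used in QFD matrices (assumed 9/3/1 scale)
STRONG, MEDIUM, WEAK = 9, 3, 1


def qfd_relative_ratings(ctq_weights, relationships):
    """Relative rating (%) of each column, e.g. a testing type or a module.

    ctq_weights:   {ctq: customer importance}
    relationships: {column: {ctq: relationship strength}}; missing pairs count as 0
    """
    raw = {
        column: sum(ctq_weights[ctq] * strength for ctq, strength in links.items())
        for column, links in relationships.items()
    }
    total = sum(raw.values())
    if total == 0:
        raise ValueError("no relationships recorded")
    return {column: 100.0 * score / total for column, score in raw.items()}


# Hypothetical CTQs and importance weights (1-5)
ctq_weights = {"accurate reliability figures": 5, "fast reports": 3, "secure vendor data": 4}
relationships = {
    "Functional": {"accurate reliability figures": STRONG, "fast reports": WEAK,
                   "secure vendor data": WEAK},
    "Performance": {"fast reports": STRONG},
    "Security": {"secure vendor data": STRONG},
    "User interface": {"fast reports": MEDIUM},
}

ratings = qfd_relative_ratings(ctq_weights, relationships)
for column, pct in sorted(ratings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{column}: {pct:.1f}%")
```

Normalizing to percentages makes the ratings of different QFDs comparable, which is how a “relative rating” chart is usually read.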

With the list of modules or components prioritized by customer CTQs, a Customer Priority based Component Risk Matrix needs to be developed. It is similar to James Bach’s ‘Component Risk Matrix’, but places more emphasis on prioritizing risks by customer expectations.

SWOT analysis and assumption risk mitigation activities help uncover many technical risks. Clear communication of risks is as important as their identification. The Customer Priority based Component Risk Matrix provides clear details of the set of risks in each component of the system.

3.2 Risk based Testing Strategy

The high-risk components identified in the previous activity are sorted by risk factor. This sorted list serves as the input for the activities in this phase.
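The method does not give a formula for the risk factor in the Customer Priority based Component Risk Matrix, so the sketch below assumes one: the module’s customer priority (for example its relative QFD rating) multiplied by the likelihood and impact of each risk. The class, the scales and the sample rows are hypothetical; the point is only the sort that produces the ordered input for this phase.

```python
from dataclasses import dataclass


@dataclass
class ComponentRisk:
    component: str
    risk: str
    customer_priority: float  # assumed: module's relative QFD rating, 0.0-1.0
    likelihood: int           # assumed 1-5 scale
    impact: int               # assumed 1-5 scale

    @property
    def risk_factor(self) -> float:
        # Assumed formula: customer priority weights likelihood * impact
        return self.customer_priority * self.likelihood * self.impact


def sort_by_risk_factor(matrix):
    """Highest risk factor first; this ordered list feeds smoke test selection."""
    return sorted(matrix, key=lambda row: row.risk_factor, reverse=True)


# Hypothetical matrix rows
matrix = [
    ComponentRisk("Reporting", "chart totals disagree with source data", 0.35, 3, 4),
    ComponentRisk("Admin", "role mapping misconfigured", 0.10, 2, 5),
    ComponentRisk("Import", "legacy schema change breaks import", 0.40, 4, 5),
]

for row in sort_by_risk_factor(matrix):
    print(f"{row.component:<10} {row.risk_factor:5.2f}  {row.risk}")
```

Weighting by customer priority means that an equally likely, equally damaging risk ranks higher in a module the customer cares more about, which is the distinguishing idea of the customer-priority matrix.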

For each component, identify the smoke test cases that are most likely to uncover defects in the risk-prone areas identified in the ‘Customer Priority based Component Risk Matrix’. Risk based smoke test cases therefore differ from the normal smoke test cases developed in traditional testing.

Each test case should carry an RPN (Risk Priority Number). To calculate the RPN of a test case, rate the Severity of the risk, the Probability of the risk occurring and the Detection capability of the risk (the ease of detecting it). Each rating takes one of the values 1, 5 or 9, where 9 is the highest.
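A minimal sketch of the RPN calculation, assuming the conventional FMEA definition RPN = S × P × D (the product of the three ratings). The class name and test case IDs are hypothetical. In classic FMEA a high detection rating means the problem is hard to detect; the sketch simply multiplies whatever ratings are supplied and enforces the 1, 5, 9 scale.

```python
from dataclasses import dataclass

ALLOWED_RATINGS = (1, 5, 9)


@dataclass(frozen=True)
class RiskTestCase:
    tc_id: str
    severity: int     # S: severity of the risk
    probability: int  # P: probability of the risk occurring
    detection: int    # D: detection rating

    def __post_init__(self):
        # Reject ratings outside the 1 / 5 / 9 scale
        for name in ("severity", "probability", "detection"):
            value = getattr(self, name)
            if value not in ALLOWED_RATINGS:
                raise ValueError(f"{self.tc_id}: {name}={value} not in {ALLOWED_RATINGS}")

    @property
    def rpn(self) -> int:
        # FMEA-style product; ranges from 1 to 729 on this scale
        return self.severity * self.probability * self.detection


def order_by_rpn(cases):
    """Highest RPN first, the order in which smoke tests would be run."""
    return sorted(cases, key=lambda tc: tc.rpn, reverse=True)


# Hypothetical smoke test cases
cases = [
    RiskTestCase("TC-01", severity=9, probability=5, detection=9),
    RiskTestCase("TC-02", severity=5, probability=5, detection=1),
    RiskTestCase("TC-03", severity=9, probability=9, detection=9),
]

# Prints TC-03 729, then TC-01 405, then TC-02 25
for tc in order_by_rpn(cases):
    print(tc.tc_id, tc.rpn)
```

The coarse 1, 5, 9 scale spreads RPN values widely, so high-risk test cases separate clearly from low-risk ones when the list is shared with stakeholders.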

The Test cases with RPN values listed against the release versions can be shared with the project stakeholders.

Management and customers can then decide whether to prioritize specific module-level test cases or the smoke test cases with the highest RPN values.

3.3 Risk Based Reporting

Risk reporting is based on the (risk based) smoke test cases identified in the previous step. The defects uncovered during smoke testing need to be reported in a meaningful way. It is important to monitor the errors closely and to collect the following metrics:

· Number of Smoke Test cases planned vs. executed vs. failed.

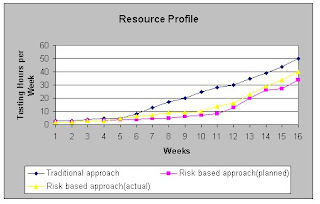

· The test effort planned vs. actual.

· Number of planned releases (to the testing team) vs. number of actual releases

· Number of rejected builds

· Number of resources working on the project

· Total number of defects identified during smoke testing.

· Total number of defects rejected.

· Number of rejected defects due to functional changes

· Number of rejected defects due to lack of understanding

· Total number of defects per module.

· Percentage of tests passed

· Percentage of tests failed

· The effort (in hours) required to identify a defect

· The average time interval between defects

· Total number of defects that do not have a test case

· Total number of test cases added at run time

· Number of defects per developer (if possible)

· Number of defects per defect type

· High Priority defects found vs. Medium or Low priority defects

· Total number of deferred defects

· Metrics on the defect injection phase and defect cause
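Several of these metrics fall directly out of the smoke-test records. The sketch below computes a subset of them; the `Defect` record, its fields and the sample data are hypothetical, and percentages are assumed to be computed over executed (not planned) test cases.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Defect:
    module: str
    priority: str                       # "High", "Medium" or "Low"
    rejected: bool = False
    test_case_id: Optional[str] = None  # None: found without a test case


def smoke_metrics(planned, executed, failed, defects):
    """A subset of the metrics listed above; percentages are of executed tests."""
    passed = executed - failed
    return {
        "planned_vs_executed_vs_failed": (planned, executed, failed),
        "pct_passed": 100.0 * passed / executed if executed else 0.0,
        "pct_failed": 100.0 * failed / executed if executed else 0.0,
        "total_defects": len(defects),
        "rejected_defects": sum(d.rejected for d in defects),
        "defects_without_test_case": sum(d.test_case_id is None for d in defects),
        "defects_per_module": dict(Counter(d.module for d in defects)),
        "high_vs_medium_or_low": (
            sum(d.priority == "High" for d in defects),
            sum(d.priority != "High" for d in defects),
        ),
    }


# Hypothetical smoke-test results
defects = [
    Defect("Import", "High", test_case_id="TC-03"),
    Defect("Import", "Medium"),
    Defect("Reporting", "High", rejected=True, test_case_id="TC-01"),
    Defect("Reporting", "Low", test_case_id="TC-02"),
]
metrics = smoke_metrics(planned=40, executed=36, failed=9, defects=defects)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

Keeping the raw records and deriving the metrics from them, rather than tallying by hand, makes the figures reproducible from release to release, which matters later when they feed risk prediction.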

The identified defects need to be tracked against the risk of not fixing them; this helps the development team fix the high-risk defects first. The defects can be plotted as shown in Figure 2 for easy interpretation of the high-priority risky defects. The defects in the top right quadrant, marked “1”, should be addressed first.
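Because Figure 2 is not reproduced here, its axes are an assumption in the sketch below: the x axis is taken as the likelihood that an unfixed defect affects users and the y axis as the impact of not fixing it, both rated 1 to 9 and split at 5. Only quadrant “1” (top right) comes from the text; the other quadrant labels, the function names and the defects are hypothetical.

```python
def quadrant(likelihood: float, impact: float, midpoint: float = 5.0) -> str:
    """Place a defect on a 2x2 grid; quadrant "1" is the top right corner.

    Assumed axes: x = likelihood the defect affects users if not fixed,
    y = impact of not fixing it, both rated 1-9 with the split at `midpoint`.
    """
    right = likelihood > midpoint
    top = impact > midpoint
    if right and top:
        return "1"  # high likelihood and high impact: fix first
    if top:
        return "top-left"
    if right:
        return "bottom-right"
    return "bottom-left"


def fix_first(defects):
    """Defect IDs in quadrant 1, highest likelihood * impact first."""
    in_q1 = [(d_id, x, y) for d_id, x, y in defects if quadrant(x, y) == "1"]
    return [d_id for d_id, x, y in sorted(in_q1, key=lambda d: d[1] * d[2], reverse=True)]


# Hypothetical defects: (id, likelihood, impact)
defects = [("D-101", 8, 9), ("D-102", 2, 8), ("D-103", 7, 3), ("D-104", 9, 6)]
print(fix_first(defects))  # ['D-101', 'D-104']
```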

3.4 Risk Prediction

Risk prediction is derived from the previous activities of identifying, assessing & prioritizing, mitigating and reporting risks. Risk prediction involves forecasting risks using the history and knowledge of previously identified risks.

During test execution it is important to monitor the quality of each individual function; the metrics collected during the testing phase become the data for forecasting future risks.
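The paper does not prescribe a forecasting technique. One simple possibility, shown here as an assumption rather than the authors’ method, is an exponentially weighted moving average of each module’s defect counts across releases, so that recent releases count more; modules whose forecast reaches a chosen threshold are flagged as likely risk areas. The module names, counts, `alpha` and threshold are hypothetical.

```python
def ewma_forecast(history, alpha=0.5):
    """Exponentially weighted forecast of the next value; recent releases weigh more."""
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    forecast = history[0]
    for value in history[1:]:
        forecast = alpha * value + (1 - alpha) * forecast
    return forecast


def predicted_risky_modules(defects_per_release, threshold, alpha=0.5):
    """Modules whose forecast defect count reaches the threshold, highest first."""
    forecasts = {m: ewma_forecast(h, alpha) for m, h in defects_per_release.items()}
    ranked = sorted(forecasts.items(), key=lambda kv: kv[1], reverse=True)
    return [(m, f) for m, f in ranked if f >= threshold]


# Hypothetical defect counts per module over three releases
history = {
    "Import": [4, 8, 12],
    "Reporting": [10, 6, 2],
    "Admin": [1, 1, 2],
}
print(predicted_risky_modules(history, threshold=5))
# [('Import', 9.0), ('Reporting', 5.0)]
```

A larger `alpha` reacts faster to the latest release; a smaller one smooths out noisy releases. Note that Reporting is still flagged even though its defect count is falling, because its history keeps the forecast high.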

4. The Case Study

The rest of this paper discusses a case study that applies the risk based approach to software testing, relating it to the activities described in Section 3.

4.1 System under Test

Early Indicators Product Tracking (EIPT) is a process to track the performance of in-service products based on reliability analysis. It helps to proactively identify in-service products that are experiencing degraded reliability performance, which results in more efficient spares management and handling of warranty issues.

The system interacts with various types of databases (SQL, Oracle, COBOL and FoxPro), collects details about aircraft parts and derives the reliability of those parts.

The system accepts data from various vendors, performs a reliability analysis and provides charts and reports that enable each vendor to identify the reliability factor of a part.

4.2 Challenges in Testing

The following are the key challenges in the project.

1. Limited Testing Time: As the project required new complex algorithms and services to be implemented, development took longer than expected and the testing phase had to be squeezed. It was therefore critical to adopt a test strategy in which the core modules that carry the most risk are tested first.

2. Limited Testing Resources: Few senior testing resources were available at the time of the project. The project demanded competent test engineers with knowledge of the system architecture, as the testing involved a simulation model and Windows services. It was essential to plan for resources that could complete the testing as soon as possible without spending effort on knowledge transition or training.

3. Simulation Testing: Simulation testing needs a white box approach because the tester often has to reverse-engineer the available data to ensure data integrity.

4. Database Relativity: The system talks to four different types of databases (SQL, Oracle, FoxPro and COBOL) to track the reliability of aircraft parts.

5. Integration with Third-party Tools: As the system exposes an interface to third-party tools such as Minitab, the critical areas of this interface require thorough testing. The limitations of the third-party tools should not interfere with system testing.

4.3 Risk based Test Strategy

The EIPT application consists of seven modules. The following activities were done as part of the test strategy.

4.3.1 Requirements Risk identification

During the requirements document review, the testing team identified and highlighted the requirements of these modules that were ad hoc or absurd. These requirements were then validated and revised according to the requirements risk mitigation strategy. Each module lacking functional requirements coverage, usability and user interface requirements coverage, or security and performance requirements coverage was identified and highlighted by following the strategy described in Section 3.1. The table in Figure 3 shows a sample of how the module-level requirements are validated and the risk factor is derived.

Figure 3 : Module Risk Factor Calculation

After the list of requirements and modules vulnerable to failure was prepared, a Stage Gate Review (SGR) was scheduled to review and revise the requirements. Validating the requirements against the following sample points uncovers many risks:

Is any tool used for creating prototypes?

Configuration Management tool not being identified for placing the project artifacts

Software tools to support planning & tracking activities not being used regularly

Requirements put excessive performance constraints on the product

Unavailability of tools or test equipment

Incomplete interface descriptions

Operation in an unfamiliar or unproved hardware environment causes unforeseen problems

Strict requirements for compatibility with existing systems require more testing, and additional precautions in design and implementation than expected

4.3.2 Customer CTQs based Risk Matrix

The objective of this activity is to identify the prioritized set of modules based on customer CTQs and to analyze the risks in those modules. Risk prioritization strategies normally prioritize risks based on risk severity, deadlines, failure mode use cases, etc. We found our proposed method more advantageous than these methods because it prioritizes risks based on customer expectations and thereby delivers quality.

The VOC (Voice of Customer) was identified through discussions with the customer, and it helped validate the customer expectations against the QFD developed during the requirements phase of the project.

A QFD was then done to prioritize the types of testing required to meet the customer expectations. The relative ratings in Figure 4 show that functional testing has the highest priority according to the customer’s expectations.

Figure 4 : Prioritizing Testing Type using QFD Tool

A second QFD was done to prioritize the modules for meeting the customer expectations. The relative ratings in Figure 5 show which modules have high priority and need more attention.

Figure 5 : Prioritizing the Modules using QFD Tool

The Customer Priority based Component Risk Matrix was developed to identify the module-level risks, and a risk factor was derived for each in order to prioritize the risks that are of interest to the customer. SWOT analysis and assumption risk analysis were used to identify the risks in each module. The table in Figure 6 lists the risks in each module and the corresponding risk factor.

Figure 6 : Customer priority based Component Risk Matrix

4.3.3 Risk based Test case Design

After risk assessment and prioritization, smoke test cases were identified for each module. These smoke test cases emphasize testing the risk-prone and fault-prone areas of the module. Each test case carries three values, a Severity rating (S), a Probability rating (P) and a Detection capability rating (D), from which its RPN value was derived.

The table in Figure 7 lists a sample set of test cases from various modules with their corresponding RPN values.

Figure 7 : Risk based Testcase Design Summary

Figure 8 : Risk Report Metrics
